Key Components of Data Platform Engineering

Data platform engineering is a core part of modern data science and analytics. As the volume, variety, and velocity of data grow, businesses need efficient ways to store, process, and analyze it. Data platform engineering is the practice of designing, building, and maintaining a data platform that can handle large volumes of data, process and store that data efficiently, and provide robust analytics capabilities.

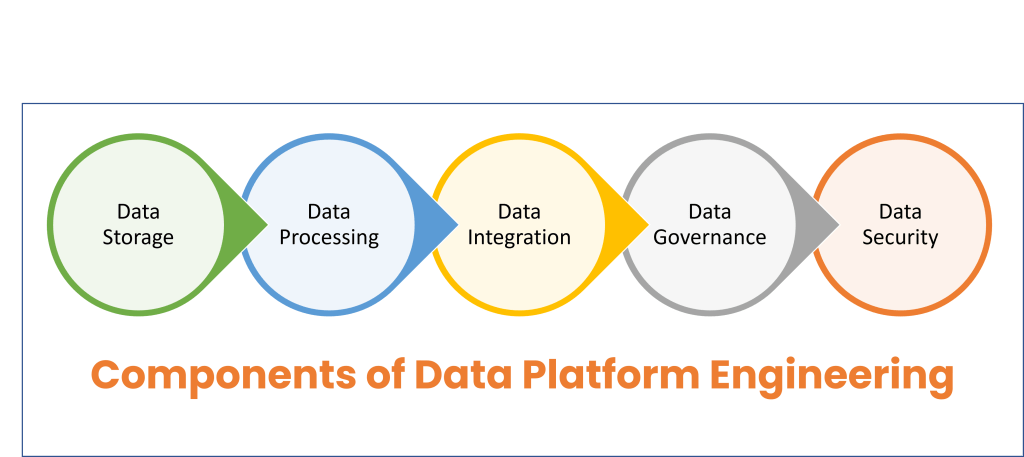

The process of data platform engineering involves several components, including data storage, data processing, data integration, data governance, and data security. Let’s take a closer look at each of these components.

Data Storage:

The first step in data platform engineering is choosing the right storage system for your data. You need to decide whether to use a traditional database system or a distributed big data storage layer such as the Hadoop Distributed File System (HDFS). Apache Spark is often mentioned alongside Hadoop, but it is a processing engine that reads from such storage rather than a storage system itself. Traditional relational databases are well suited to structured data, while big data platforms can hold structured, semi-structured, and unstructured data. When choosing a storage system, you also need to weigh scalability, performance, and cost.

Data Processing:

Once your data is stored, you need to process it to extract insights. Data processing involves several steps, including data cleaning, data transformation, data enrichment, and data aggregation. For real-time stream processing you can use tools like Apache Kafka (through its Kafka Streams library), Apache Storm, or Apache Flink; for batch processing you can use Hadoop MapReduce. The key is to choose the tool that fits the type of data you have, the latency you need, and the insights you want to extract.
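To make these steps concrete, here is a minimal batch-style sketch in plain Python. It is only an illustration: the event fields, the `REGION_BY_COUNTRY` lookup table, and all function names are assumptions made for this example, and a production pipeline would normally run on one of the engines mentioned above.

```python
from collections import defaultdict

# Hypothetical raw events; the field names are assumptions for illustration.
RAW_EVENTS = [
    {"user_id": "u1", "country": "de", "amount": "19.99"},
    {"user_id": "u2", "country": "US", "amount": "5.00"},
    {"user_id": "u1", "country": "de", "amount": "not-a-number"},  # dirty row
    {"user_id": "u3", "country": "us", "amount": "12.50"},
]

# Hypothetical reference data used for enrichment.
REGION_BY_COUNTRY = {"DE": "EMEA", "US": "AMER"}


def clean(events):
    """Cleaning: drop rows whose amount cannot be parsed as a number."""
    for event in events:
        try:
            float(event["amount"])
        except ValueError:
            continue
        yield event


def transform(events):
    """Transformation: normalize country casing and convert amount to float."""
    for event in events:
        yield {
            "user_id": event["user_id"],
            "country": event["country"].upper(),
            "amount": float(event["amount"]),
        }


def enrich(events):
    """Enrichment: attach a region looked up from reference data."""
    for event in events:
        region = REGION_BY_COUNTRY.get(event["country"], "UNKNOWN")
        yield {**event, "region": region}


def aggregate(events):
    """Aggregation: total amount per region."""
    totals = defaultdict(float)
    for event in events:
        totals[event["region"]] += event["amount"]
    return dict(totals)


def run_pipeline(raw_events):
    """Chain the four steps; generators keep only one row in flight at a time."""
    return aggregate(enrich(transform(clean(raw_events))))


if __name__ == "__main__":
    print(run_pipeline(RAW_EVENTS))
```

Each step is a small function with a single responsibility, so a step can be tested or replaced on its own. The same shape carries over to stream processing, where the steps run continuously instead of over a fixed list.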

Data Integration:

Data integration is the process of combining data from different sources into a single view. This can be challenging because data arrives from many kinds of sources, including databases, APIs, social media, and IoT devices, each with its own format and update schedule. You need to ensure that the combined data is accurate, consistent, and up to date. To do this, you can use tools like Apache NiFi or Talend to integrate data from multiple sources and transform it into a usable format.
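As a rough sketch of what "a single view" can mean in practice, the following Python example merges records from two hypothetical sources that identify customers by email. The source names, field names, and the "newest record wins" rule are assumptions chosen for illustration, not a prescription; dedicated integration tools handle far more cases.

```python
from datetime import date

# Two hypothetical sources with inconsistent key naming and casing.
CRM_ROWS = [
    {"Email": "Ana@Example.com", "name": "Ana Ruiz", "updated": date(2024, 1, 10)},
    {"Email": "bo@example.com", "name": "Bo Chen", "updated": date(2024, 3, 2)},
]
API_ROWS = [
    {"email": "ana@example.com", "plan": "pro", "updated": date(2024, 2, 1)},
    {"email": "cy@example.com", "plan": "free", "updated": date(2024, 2, 20)},
]


def normalize(row, source):
    """Map a source row onto a common shape with a lowercase email key."""
    email = (row.get("email") or row.get("Email")).strip().lower()
    fields = {k: v for k, v in row.items() if k.lower() not in ("email", "updated")}
    return {"email": email, "updated": row["updated"], "source": source, **fields}


def integrate(*sources):
    """Merge records by email; on conflicts the most recently updated value wins."""
    records = [normalize(row, name) for name, rows in sources for row in rows]
    unified = {}
    # Process oldest first so that newer records overwrite older values.
    for record in sorted(records, key=lambda r: r["updated"]):
        merged = unified.setdefault(
            record["email"], {"email": record["email"], "sources": []}
        )
        merged["sources"].append(record["source"])
        for key, value in record.items():
            if key != "source":
                merged[key] = value
    return unified


if __name__ == "__main__":
    view = integrate(("crm", CRM_ROWS), ("api", API_ROWS))
    for email, customer in view.items():
        print(email, customer)
```

Keeping the list of contributing sources on each merged record makes it easier to trace where a value came from when the sources disagree.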

Data Governance:

Data governance is the process of managing the availability, usability, integrity, and security of data. It involves defining policies, processes, and standards to ensure that data is used and managed effectively. You need a clear understanding of who owns the data, who has access to it, and how it is used. Tools can support this work: Apache Atlas handles metadata management and data lineage, and Apache Ranger handles defining and auditing access policies.
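The questions of ownership, access, and usage can be captured as metadata and checked automatically. The sketch below is a hypothetical, in-memory catalog written in Python; the `DatasetEntry` fields, the classification labels, and the rules in `policy_violations` are assumptions for illustration and do not reflect the data model of any particular governance tool.

```python
from dataclasses import dataclass, field

# Hypothetical classification labels an organization might standardize on.
ALLOWED_CLASSIFICATIONS = {"public", "internal", "confidential"}


@dataclass
class DatasetEntry:
    """Catalog record answering: who owns it, who may access it, who uses it."""
    name: str
    owner: str = ""
    classification: str = ""
    allowed_roles: set = field(default_factory=set)
    consumers: list = field(default_factory=list)


def policy_violations(entry):
    """Return a list of governance problems found for one catalog entry."""
    problems = []
    if not entry.owner:
        problems.append("missing owner")
    if entry.classification not in ALLOWED_CLASSIFICATIONS:
        problems.append("missing or unknown classification")
    if not entry.allowed_roles:
        problems.append("no roles granted access")
    return problems


def audit(catalog):
    """Map each non-compliant dataset name to its list of problems."""
    report = {}
    for entry in catalog:
        problems = policy_violations(entry)
        if problems:
            report[entry.name] = problems
    return report


if __name__ == "__main__":
    catalog = [
        DatasetEntry("orders", owner="sales-team", classification="internal",
                     allowed_roles={"analyst"}, consumers=["revenue-dashboard"]),
        DatasetEntry("clickstream", classification="secret"),
    ]
    print(audit(catalog))
```

Running a check like this on every catalog change turns written policy into something that fails visibly when a dataset is registered without an owner or a classification.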

Data Security:

Data security is a critical component of data platform engineering. With data breaches becoming more frequent, you need to ensure that your data is protected. That means implementing measures such as encryption at rest and in transit, access controls, and firewalls to prevent unauthorized access. In the Hadoop ecosystem, you can use tools like Apache Knox, a gateway that secures access to cluster services, or Apache Metron, a security analytics framework.
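Access control is the easiest of these measures to illustrate in a few lines. The Python sketch below shows a simple role-based check that returns raw data to one role, masked data to another, and refuses everyone else. The role names, the `SENSITIVE_FIELDS` set, and the hard-coded `MASKING_KEY` are assumptions for this example only. Note that the keyed hashing used here is pseudonymization, not encryption; real encryption should rely on a vetted cryptography library and a managed key store.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS = {
    "analyst": {"read_masked"},
    "admin": {"read_masked", "read_raw"},
}

# Hypothetical set of fields that must not be shown in the clear.
SENSITIVE_FIELDS = {"email"}

# Placeholder key for the example; a real key belongs in a secrets manager.
MASKING_KEY = b"demo-key-do-not-use"


def pseudonymize(value, key=MASKING_KEY):
    """Replace a value with a short keyed hash (stable, but not reversible)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def read_record(record, role):
    """Return the record as the given role is allowed to see it."""
    permissions = ROLE_PERMISSIONS.get(role, set())
    if "read_raw" in permissions:
        return dict(record)
    if "read_masked" in permissions:
        return {
            key: pseudonymize(value) if key in SENSITIVE_FIELDS else value
            for key, value in record.items()
        }
    # Unknown roles get nothing: deny by default.
    raise PermissionError(f"role {role!r} may not read this dataset")


if __name__ == "__main__":
    row = {"email": "ana@example.com", "plan": "pro"}
    print(read_record(row, "admin"))
    print(read_record(row, "analyst"))
```

Because the masked value is stable for a given key, analysts can still join and count by the masked field without seeing the underlying email address.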

Data platform engineering is essential for any business that wants to get value from data analytics. To build an effective data platform, you need to address data storage, data processing, data integration, data governance, and data security together, because a weakness in any one of them limits what the others can deliver. With the right tools and processes in place, you can unlock the full potential of your data and drive better business outcomes.

Conclusion

Data platforms are key to understanding, governing, and accessing your organization’s data. The right choice comes down to what you want to do with your data and how you want to do it. You might build a customer data platform or a big data platform, or use an operational data platform or related technology such as MongoDB Atlas, Apache Hadoop, Cloudera, Apache Spark, Databricks, or Snowflake. Cloud big data platforms are cloud-based services offered by the major cloud providers: Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure. They are designed for processing and analyzing large, complex data sets, and the right platform can unlock the potential of that data.

Reach out to Niograph today to discuss custom data platforms for your organization. Our services range from on-premises to cloud-based data platforms, batch to real-time data processing, pre-integrated commercial solutions to modular, best-of-breed platforms, point-to-point to decoupled data access, enterprise warehouse to domain-based architecture, and rigid data models to flexible, extensible data schemas.